It was a Friday night and my friend and I were a few beers in. He was discussing his job and how they like to make a big deal about the fact that they are going "all digital" in some area, and in his inebriated state he stopped and asked "what does 'digital' even mean?" Luckily for him, I'm an electrical engineer and have spent most of my career dealing with analog and digital signals, so I drunkenly explained it to him as best I could.

The thing is, I'm sure a majority of people out there have the same lack of basic understanding regarding analog and digital signals, as the terms have become such buzzwords over the years that their real meaning has been obscured.

Colloquially, "digital" has come to mean "newfangled technology that's cool and exciting" whereas "analog" has come to mean "old-timey stuff that doesn't work very well and is pretty much obsolete nowadays." These associations aren't completely wrong in many cases, but they hide many important facts about the nature of analog and digital. In this article I'll do my best to explain what the terms really mean, and what the future holds for each.

What Is Analog?

The term analog comes from the Greek word analogos, which is the same word that we get analogy from. An analog signal, similarly, can be thought of as an analogy to something physical. It's important to note that analog and digital can refer to more things than electrical signals, but I'll spend most of my time using these as examples since it's where they are most often used in everyday language.

The "trademark" of an analog signal, so to speak, is the fact that it is an exact representation of a physical quantity. Imagine that you want to duplicate the size and shape of your hand, so you put it over a piece of paper and trace it out with a pencil. The resulting outline of your hand can be thought of as an analog signal that represents your hand.

This seems good, right? Don't you want a signal to exactly represent whatever physical quantity you'd like to measure or duplicate? The answer is yes, this is good, but it also has some issues associated with it. If you trace your hand out with a pencil and zoom in on the final result, then you'll probably see places where the pencil slipped a little and the outline doesn't exactly look like your hand. This represents the main problem with analog signals, and the main reason why we don't see them as much as we used to: noise.

The little imperfections that come about when trying to duplicate a physical quantity are known as "noise", and noise is the bane of an analog engineer's existence. Most people are probably familiar with noise in the context of audio. Anyone who is older (or perhaps a young hipster) has probably listened to music on a vinyl record, which is a purely analog medium. The playback of a record will inevitably involve a little bit of the characteristic "snap, crackle, and pop", with perhaps some hissing as well. Both of these phenomena are examples of noise that is either produced from playing back the record or initially recording the audio. It's more than likely the former since great care is usually taken in recording to prevent noise.

Even though vinyl records can be noisy, audiophiles often prefer them because they are a pure representation of the music. That reasoning is sound, since an analog signal is a continuous representation of a physical quantity (in this case, the pressure waves that produce sound). In the case of music (and other types of analog signals), if the means of capturing and playing back the signal are of high enough quality, then the noise can be reduced substantially. So if you have a high-quality record player and good records, then listening to vinyl is pretty much the best of all worlds. It is pretty inconvenient though...but that's another issue.

What Are Digital Signals?

In the analog world, nothing is completely black or white; everything exists as one of an infinite number of shades of gray (definitely more than fifty). In the digital world, things are either black or white, with nothing in between.

The term "digital" comes from the Latin digitus, which literally translates to "finger; toe". This makes sense, as the number of fingers and toes you have is a discrete, finite value (hopefully you don't have a fractional number of fingers or toes...but if you do then...sorry). This brings about the main difference between analog and digital: an analog signal is continuous and can take any of infinitely many values, whereas a digital signal is discrete and can take only a finite set of values. An analog signal is loosey goosey, chill, laid back, whereas a digital signal is rigid, uptight, and severely lacks chill.

Going back to our hand-tracing example, imagine that you want to trace your hand but don't want to deal with the little noisy perturbations that arise from just tracing it. So you get some grid-lined paper and completely darken every square that touches your hand. The result is a digital representation of your hand (and probably a pretty bad one). But hey, you got rid of the noise!

Digital signal processing has many benefits. Not only can it make a signal far more resistant to noise, but it also has the convenience of being implemented in software, which allows for greater flexibility when processing. Analog signals, on the other hand, are almost exclusively processed using hardware, which can be bulky and difficult to change.
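One way to see the noise benefit is to send a few bits through a noisy channel and then decide each bit with a simple threshold. The sketch below is only an illustration: the channel model, the noise amplitude of 0.3, and the function names `transmit` and `recover_bits` are assumptions made for the example, not something from a real system.

```python
import random

def transmit(levels, noise_amplitude, rng):
    """Add bounded random noise to each level (hypothetical channel model)."""
    return [v + rng.uniform(-noise_amplitude, noise_amplitude) for v in levels]

def recover_bits(received, threshold=0.5):
    """Decide each bit by comparing the noisy level against a threshold."""
    return [1 if v > threshold else 0 for v in received]

rng = random.Random(0)  # fixed seed so the run is repeatable
bits = [1, 0, 1, 1, 0, 0, 1, 0]
sent = [float(b) for b in bits]

# The received levels are no longer exactly 0.0 or 1.0 ...
received = transmit(sent, noise_amplitude=0.3, rng=rng)

# ... but as long as the noise stays below 0.5, every bit is recovered.
recovered = recover_bits(received)
print(recovered == bits)  # True
```

An analog receiver would have to live with the perturbed levels as they are. The digital receiver only has to tell "closer to 0" from "closer to 1", so small noise disappears completely. If the noise grows past half the gap between the two levels, though, bits start to flip.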

Digital signals are not without their difficulties, however. In our hand-tracing example, the patchwork of squares that results from "tracing" your hand wouldn't look much like your hand if you used normal grid paper. If the squares were really small, though, it would look pretty similar to your hand, and may even look better than the analog trace since it wouldn't have any of the random noise. The number of squares on the paper is referred to as the "resolution", a term you've probably heard before without knowing exactly what it means. Having a finer resolution (in this example, more squares) gives us a better picture of our hand...but it would also take longer to trace and use up more memory if we stored it on a computer. This becomes a compromise in digital signal processing: you want enough resolution so that you can adequately represent your signal, but not so much that it becomes cumbersome.
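The resolution trade-off can be sketched in a few lines of code. As an assumption for illustration, a disc stands in for the hand, and a square is shaded when its center falls inside the disc (a simplification of "darken every square that touches your hand"). The names `rasterize` and `shaded_area` are made up for this example.

```python
import math

RADIUS = 0.35  # hypothetical "hand": a disc centered on a 1 x 1 sheet of paper

def rasterize(n):
    """Return an n x n grid; a cell is True if its center lies inside the disc."""
    grid = []
    for row in range(n):
        y = (row + 0.5) / n
        grid.append([
            ((col + 0.5) / n - 0.5) ** 2 + (y - 0.5) ** 2 <= RADIUS ** 2
            for col in range(n)
        ])
    return grid

def shaded_area(grid):
    """Fraction of the sheet covered by shaded cells."""
    n = len(grid)
    return sum(cell for row in grid for cell in row) / (n * n)

true_area = math.pi * RADIUS ** 2
for n in (5, 20, 200):
    error = abs(shaded_area(rasterize(n)) - true_area)
    print(f"{n} x {n} grid: {n * n} cells to store, area error {error:.4f}")
```

The number of cells to store grows with the square of the grid size, which is the "takes longer to trace and uses more memory" side of the compromise, while the finest grid matches the true shape far more closely than the coarsest one.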

What we've described in our hand-tracing example is basically how digital pictures work. In a digital picture, an image is broken up into a bunch of tiny squares (called "pixels") and each pixel is given a code that represents a particular color. The more pixels you have, and the more colors you can generate, the closer the image will look to reality. Eventually, you can add enough pixels and enough colors so that adding any more won't really be noticeable to the naked eye. Once you've reached this point, adding more pixels or colors would be wasteful of computing resources. As an aside, most digital cameras these days have reached that limit (at least for normal sizes of pictures that we see on social media and small printouts), so buying a camera based on just the number of megapixels it has isn't super smart.

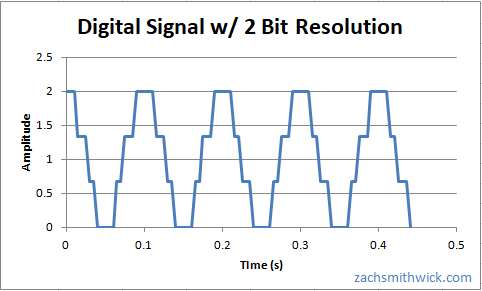

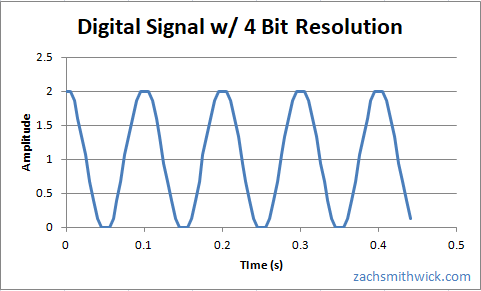

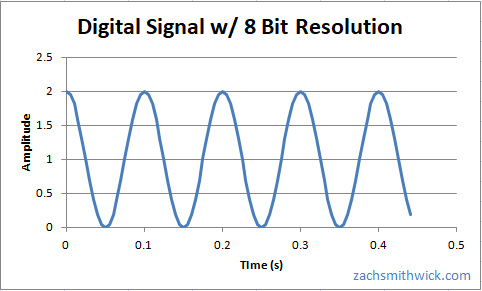

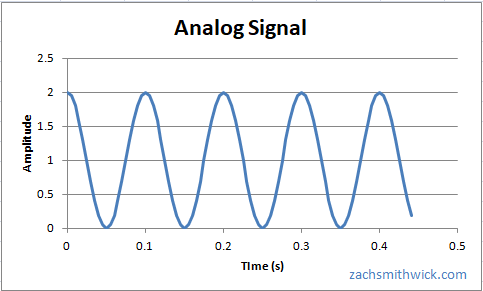

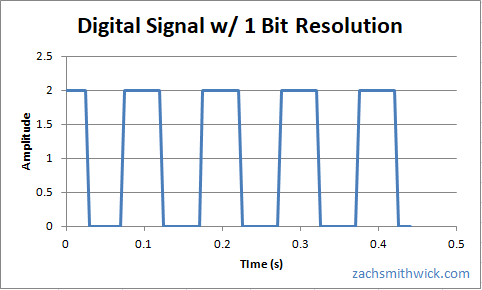

To illustrate different resolutions, below are some examples of digital signals trying to mimic an analog signal:

With 2 bits, we have 4 discrete values our digital signal can take, which makes the resulting waveform slightly better looking.

With 4 bits, we have 16 total values that our digital waveform can take, which gives a pretty good representation of our analog waveform.

With 8 bits, we have 256 total values, which makes our digital waveform practically indistinguishable from our analog waveform.

Which one of the above digital resolutions would be considered "good enough"? Well, it depends entirely on the application. For some applications a simple 1-bit resolution may be sufficient, and for others you may need 16, 32, or even 64 bits!
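To put rough numbers on the bit depths, here is a minimal sketch that rounds one cycle of a sine wave to the nearest of 2^bits evenly spaced levels and reports the worst-case error. The test signal, the sample count of 100, and the function name `quantize` are assumptions chosen for illustration.

```python
import math

def quantize(x, bits):
    """Round x in [-1, 1] to the nearest of 2**bits evenly spaced levels."""
    levels = 2 ** bits
    step = 2.0 / (levels - 1)        # spacing between adjacent levels
    index = round((x + 1.0) / step)  # which level is closest
    return -1.0 + index * step

# One cycle of a sine wave stands in for the analog signal.
samples = [math.sin(2 * math.pi * k / 100) for k in range(100)]

for bits in (1, 2, 4, 8):
    worst = max(abs(s - quantize(s, bits)) for s in samples)
    print(f"{bits} bit(s): {2 ** bits} levels, worst-case error {worst:.4f}")
```

Each extra bit doubles the number of levels, so the worst-case error shrinks quickly as bits are added. Whether that error matters is exactly the "depends on the application" question.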

Which One Is Better, Analog or Digital?

Hopefully now you can see that both analog and digital have their pros and cons, so neither one is actually "better". The reason we hear so much about the world "going digital" is that digital implementations of things have become easier with the ubiquity of computers and smartphones. Since the cost of computing devices continues to decline and their capabilities continue to increase, implementing things digitally becomes more and more attractive.

As an example, a 10-megapixel image (which means 10 million pixels...which is a lot of pixels) would have taken up all of the space on an old computer, thus making it impractical. Therefore, storing many pictures on an old computer would have necessitated reducing the file size of the images to a point where they no longer looked very good. At that time, analog film cameras would have given clearer pictures. However, now that computers have much more memory than they used to, and much faster processors to search through all that memory, having a bunch of huge image files on your computer (or phone) isn't that big of a deal, making the medium much easier and possibly better than analog film cameras.
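A quick back-of-the-envelope calculation shows the scale involved. The sketch below assumes 24 bits of color per pixel and no compression, and it uses a 3.5-inch high-density floppy disk (1,474,560 bytes) purely as an illustrative yardstick for old storage. Real image files are usually compressed, so they come out smaller than this.

```python
import math

def uncompressed_bytes(pixels, bits_per_pixel=24):
    """Raw storage for an image, assuming no compression (an assumption)."""
    return pixels * bits_per_pixel // 8

FLOPPY_BYTES = 1_474_560  # capacity of a 3.5-inch high-density floppy disk

size = uncompressed_bytes(10_000_000)  # a 10-megapixel image
print(f"{size / 1e6:.0f} MB uncompressed")                      # 30 MB
print(math.ceil(size / FLOPPY_BYTES), "floppy disks to hold it")  # 21
```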

It's important to note, though, that no matter how much resolution a digital signal has, there will always be some information lost. In many cases this lost information won't be noticeable, but it is lost nonetheless. An analog signal retains all the information of the signal, and will be the superior choice if the noise can be reduced to a reasonable level.

Analog Will Never Die

Given the nature of the world becoming more and more "digital", announcing that analog will never die seems bold, but it is actually irrefutably true.

It is true because the world is analog. Nature does not have a source code in a binary format, which means that interfacing with anything in the physical world will necessitate some sort of analog signal conversion. You may think that your phone is a digital device, but that's only partly true. In order to send and receive wireless signals such as those required to make calls, send texts, or connect to Wi-Fi and Bluetooth, an analog signal must be used. This goes for any other sort of wireless technology and anything else that senses physical quantities. Digital can be thought of as an artificial medium, and getting things into a digital format involves some manipulation of an analog signal.

In fact, analog may very well make a comeback one day. The reason for this is simple: digital sometimes causes more problems than it solves. While it can offer some neat processing techniques and is very malleable, digital can also be very power-hungry, can generate unwanted signals when it is converted back to analog, and is sometimes slower. For these reasons, researchers continue to experiment with building hybrid analog-digital integrated circuits that aim to make calculations nearly as accurate as an equivalent digital system while consuming much less power and processing much faster. Power savings are especially important in embedded systems (like your cell phone) since they simultaneously increase battery life and reduce heat dissipation (heat is the enemy of electronics).

Conclusion

Analog and digital signals both have their place in various applications. While it may seem like the world is going "all-digital", analog will never fully leave us...unless of course we get plugged into The Matrix and live our entire lives as a computer simulation...

Interesting! Hope analog t.v.'s come back for Asteroids!

I sure do miss the old telephones we rented from Ma Bell. They were SOLID. If you threw one down the stairs, the person was still on the line when it hit bottom. If you were angry at the person on the other end, and slammed the receiver down, you instantly felt better. Remember the old party lines? That’s a story for another day...